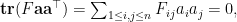

In this post, we study a few probability one results conerning the rank of random matrices: with probability one,

- a random asymmetric matrix has full rank;

- a random symmetric matrix has full rank;

many random rank one positive semidefinite matrices (belonging to

many random rank one positive semidefinite matrices (belonging to  ) are linearly independent.

) are linearly independent.

At least for the first two, they look quite natural and should be correct at first glance. However, to rigorously prove them does require some effort. The post is trying to show that one can use the concept of independence to prove the above assertions. Indeed, all the proof follows an inductive argument where independence makes life much easier:

- Draw the first sample, it satisfies the property we want to show,

- Suppose first

samples satisfy the property we want to show, draw the next sample. Since it is independent of the previous

samples satisfy the property we want to show, draw the next sample. Since it is independent of the previous  samples, the first

samples, the first  samples can be considered as fixed. Then we make some effort to say the first

samples can be considered as fixed. Then we make some effort to say the first  sample does satisfy the property.

sample does satisfy the property.

Before we going into each example, we further note for any probability measure that is absolutely continuous respect to the measure introduced below and vice versa (so independence is not needed), the probability  results listed above still hold.

results listed above still hold.

1. Random Matrices are full rank

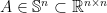

Suppose  with

with ![{A=[a_{ij}]}](https://s0.wp.com/latex.php?latex=%7BA%3D%5Ba_%7Bij%7D%5D%7D&bg=ffffff&fg=000000&s=0&c=20201002) and each entry of

and each entry of  is drawn independently from standard Gaussian

is drawn independently from standard Gaussian  . We prove that

. We prove that  has rank

has rank  with probability

with probability  .

.

To start, we should assume without loss of generality that  . Otherwise, we just repeat the following argument for

. Otherwise, we just repeat the following argument for  .

.

The trick is to use the independence structure and consider columns of  are drawn from left to right. Let’s write the

are drawn from left to right. Let’s write the  -th column of

-th column of  by

by

For the first column, we know  is linearly independent because it is

is linearly independent because it is  with probability

with probability  . For the second column, because it is drawn independently from the first column, we can consider the first column is fixed, and the probability of

. For the second column, because it is drawn independently from the first column, we can consider the first column is fixed, and the probability of  fall in to the span of a fixed column is

fall in to the span of a fixed column is  because

because  has a continuous density in

has a continuous density in  . Thus the first two columns are linearly independent.

. Thus the first two columns are linearly independent.

For general  , because

, because  , we know the first

, we know the first  columns forms a subspace in

columns forms a subspace in  and so

and so  falls into that subspace with probability

falls into that subspace with probability  (linear subspace has Lebesgue measure

(linear subspace has Lebesgue measure  in

in  and Gaussian measure is absolutely continuous with respect to Lebesgue measure and vice versa). Thus we see first

and Gaussian measure is absolutely continuous with respect to Lebesgue measure and vice versa). Thus we see first  columns are linearly independent. Proceeding the argument until

columns are linearly independent. Proceeding the argument until  completes the proof.

completes the proof.

2. Symmetric Matrices are full rank

Suppose  with

with ![{A=[a_{ij}]}](https://s0.wp.com/latex.php?latex=%7BA%3D%5Ba_%7Bij%7D%5D%7D&bg=ffffff&fg=000000&s=0&c=20201002) and each entry of the upper half of

and each entry of the upper half of  ,

,  , is drawn independently from standard Gaussian

, is drawn independently from standard Gaussian  . We prove that

. We prove that  has rank

has rank  .

.

Let’s write the  -th row of

-th row of  by

by  . For the first

. For the first  entries of the

entries of the  -th row, we write

-th row, we write  . Similarly,

. Similarly,  denotes the first

denotes the first  entries of the

entries of the  -th column. For the top left

-th column. For the top left  submatrix of

submatrix of  , we denote it by

, we denote it by  . Similar notation applies to other submatrices.

. Similar notation applies to other submatrices.

The idea is drawing each column of  from left to right sequentially and using the independence structure. Starting from the first column, with probability one, we have that

from left to right sequentially and using the independence structure. Starting from the first column, with probability one, we have that  is linearly independent (meaning

is linearly independent (meaning  ).

).

Now since  is drawn and the second column except the first entry is independent of

is drawn and the second column except the first entry is independent of  , we know it is in the span of

, we know it is in the span of  if the second column

if the second column  is

is  (

( is

is  with probability 0) multiple of

with probability 0) multiple of  . This still happens with probability

. This still happens with probability  since each entry of

since each entry of  has continuous probability density and is drawn independently from

has continuous probability density and is drawn independently from  .

.

Now assume we have drawn the first  columns of

columns of  , the first

, the first  columns are linearly independent with probability

columns are linearly independent with probability  , and the left top

, and the left top  submatrix of

submatrix of  is invertible (the base case is what we just proved). We want to show after we draw the

is invertible (the base case is what we just proved). We want to show after we draw the  column, the first

column, the first  columns are still linearly independent with probability

columns are still linearly independent with probability  . Since the first

. Since the first  columns is drawn, the first

columns is drawn, the first  entries of the

entries of the  column

column  is fixed, we wish to show the rest

is fixed, we wish to show the rest  entries which are drawn independently from all previous

entries which are drawn independently from all previous  columns will ensure linearly independence of first

columns will ensure linearly independence of first  columns.

columns.

Suppose instead  is linearly dependent with first

is linearly dependent with first  columns of

columns of  . Since the left top

. Since the left top  submatrix of

submatrix of  is invertible, we know there is only one way to write

is invertible, we know there is only one way to write  as a linear combination of previous columns. More precisely, we have

as a linear combination of previous columns. More precisely, we have

This means  has to be a fixed vector which happens with probability

has to be a fixed vector which happens with probability  because the last

because the last  entries of

entries of  is drawn independently from

is drawn independently from  ,

,  Note this implies that the first

Note this implies that the first  columns of

columns of  are linearly independent as well as the left top

are linearly independent as well as the left top  submatrix of

submatrix of  is invertible because we can repeat the argument simply by thinking

is invertible because we can repeat the argument simply by thinking  is

is  (instead of

(instead of  ). Thus the induction is complete and we prove

). Thus the induction is complete and we prove  indeed has rank

indeed has rank  with probability

with probability  .

.

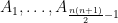

3. Rank  positive semidefinite matrices are linearly independent

positive semidefinite matrices are linearly independent

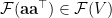

This time, we consider  ,

,  are drawn independently from standard normal distribution in

are drawn independently from standard normal distribution in  . Denote the set of symmetric matrices in

. Denote the set of symmetric matrices in  as

as  . We show that with probability

. We show that with probability  the matrices

the matrices

are linearly independent in  .

.

Denote the standard  -th basis vector in

-th basis vector in  as

as  . First, let us consider the following basis (which can be verified easily) in

. First, let us consider the following basis (which can be verified easily) in  ,

,

We order the basis according to the lexicographical order on  . Suppose

. Suppose  follows standard normal distribution in

follows standard normal distribution in  , we prove that for any fixed linear subspace

, we prove that for any fixed linear subspace  with dimension less than

with dimension less than  , we have

, we have

We start by applying an fixed invertible linear map  to

to  so that the basis of

so that the basis of  is the first

is the first  many basis vector of

many basis vector of  ({in lexicographical order.}), and the basis of the orthogonal complement (defined via the trace inner product) of

({in lexicographical order.}), and the basis of the orthogonal complement (defined via the trace inner product) of  ,

,  , is mapped to the rest of the basis vector of

, is mapped to the rest of the basis vector of  under

under  . We then only need to prove

. We then only need to prove

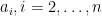

We prove by contradiction. Suppose  . It implies that there exists infinitely many

. It implies that there exists infinitely many  such that

such that  . Moreover, each component of

. Moreover, each component of  takes infinitely many values. We show such situation cannot occur. Denote the

takes infinitely many values. We show such situation cannot occur. Denote the  -th largest element in

-th largest element in  as

as  . Since

. Since  has dimension less than

has dimension less than  ,

,  contains nonzero element and so

contains nonzero element and so

![\displaystyle \mathcal{F}(\mathbf{a}\mathbf{a}^\top) \in \mathcal{F}(V) \iff [\mathcal{F}(\mathbf{a}\mathbf{a}^\top)]_{ij}=0,\quad \forall (i,j) > (i_0,j_0) \,\text{in lexicographical order.}](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%5Cmathcal%7BF%7D%28%5Cmathbf%7Ba%7D%5Cmathbf%7Ba%7D%5E%5Ctop%29+%5Cin+%5Cmathcal%7BF%7D%28V%29+%5Ciff+%5B%5Cmathcal%7BF%7D%28%5Cmathbf%7Ba%7D%5Cmathbf%7Ba%7D%5E%5Ctop%29%5D_%7Bij%7D%3D0%2C%5Cquad+%5Cforall+%28i%2Cj%29+%3E+%28i_0%2Cj_0%29+%5C%2C%5Ctext%7Bin+lexicographical+order.%7D+&bg=ffffff&fg=000000&s=0&c=20201002)

Now fix an  , we know

, we know ![{ [\mathcal{F}(\mathbf{a}\mathbf{a}^\top)]_{i_1j_1}=0}](https://s0.wp.com/latex.php?latex=%7B+%5B%5Cmathcal%7BF%7D%28%5Cmathbf%7Ba%7D%5Cmathbf%7Ba%7D%5E%5Ctop%29%5D_%7Bi_1j_1%7D%3D0%7D&bg=ffffff&fg=000000&s=0&c=20201002) means that there is some

means that there is some  (depending only on

(depending only on  ) such that

) such that

where  is the

is the  -th complement of

-th complement of  . Now if we fix

. Now if we fix  and only vary

and only vary  , then we have

, then we have

which holds for infinitely many  only if

only if

Thus we can repeat the argument as before and conclude that  holds for infinitely many

holds for infinitely many  with each component taking infinitely many values only if

with each component taking infinitely many values only if  . This contradicts the fact that

. This contradicts the fact that  is invertible and hence we must have

is invertible and hence we must have

Now proving  spans

spans  with probability

with probability  is easy. Because for each

is easy. Because for each  , the

, the  with

with  being drawn can be considered fixed because

being drawn can be considered fixed because  is drawn independently of

is drawn independently of  . But the probability of

. But the probability of  stays in the span of

stays in the span of  is

is  by previous result and hence

by previous result and hence  is linearly independent of all

is linearly independent of all  . This argument continues to hold for

. This argument continues to hold for  because the space spanned by

because the space spanned by  is at most

is at most  and so previous result applies. This completes the proof.

and so previous result applies. This completes the proof.

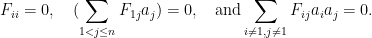

minimization problem:

are all positive and

. We call the optimziation problem (1) the least absolute deviation problem. Note the optimziation problem (1) has a single variable

. If

are all equal, then it is well-known that least absolute deviation has median of

as its minimizer. In the case of unequal weights

, we are going to show the median defined by the weights

is again the minimizer.

are ordered and distinct:

with respect to the weights

. For each

, we denote

,

, and

be the set of indices

such that

,

, and

respectively. Note that

is either a single index or an empty set.

is a median of

with respect to the weights

if

and

, where

if

is empty.

in the sense of Definition 1.

is optimal if and only if

, where

is the subdifferential of

at

, a generalization of derivative at

.

, the subdifferential at

is

if

is nonempty and

if

is empty. The addition in (4) should be understood as set addition:

.

is a convex function with whole domain

. Hence, an

is optimal if and only if

w.r.t.

. This can be computed first by ordering the

so that condition (3) is satisfied (combining same quantities). This procedure only takes

comparisons plus

operations in creating a new set of values

and their weights

of combining terms and adding weights up for the same

. Here

. Then we add

up until the

threshold is reached to determine

. This procedure again only takes

additions.